
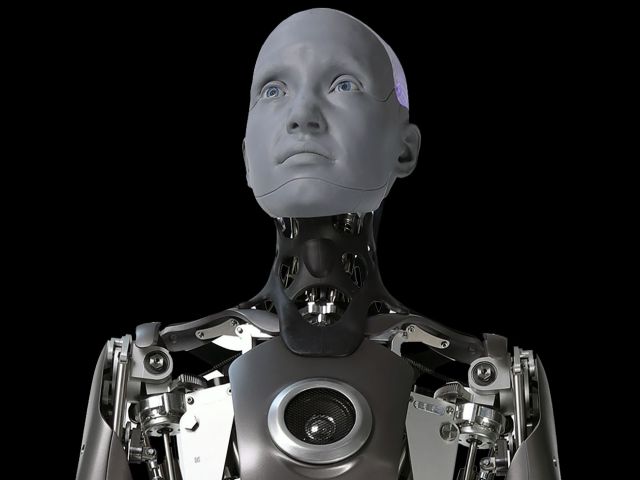

Image: Ian Maddox, CC BY-SA 4.0, via Wikimedia Commons


Tesla Autopilot is a suite of advanced driver-assistance system features offered by Tesla that includes lane centering, traffic-aware cruise control, self-parking, automatic lane changes, semi-autonomous navigation on limited-access freeways, and the ability to summon the car from a garage or parking spot. In all of these features, the driver is responsible and the car requires constant supervision. The company claims the features reduce accidents caused by driver negligence and fatigue from long-term driving.[1][2] In October 2020, Consumer Reports called Tesla Autopilot “a distant second” (behind Cadillac’s Super Cruise), although it was ranked first in the “Capabilities and Performance” and “Ease of Use” categories.[3]

As an upgrade to the base Autopilot capabilities, the company’s stated intent is to offer full self-driving (FSD) at a future time, acknowledging that legal, regulatory, and technical hurdles must be overcome to achieve this goal.[4] As of April 2020, most experts believed that Tesla vehicles lacked the necessary hardware for full self-driving.[5] Tesla was ranked last by Navigant Research in March 2020 for both strategy and execution in the autonomous driving sector.[6] In October 2020, Tesla released a “beta” version of its FSD software to a small group of testers in the United States.[7][8] Many industry observers criticized Tesla’s decision to use untrained consumers to validate the beta software as dangerous and irresponsible.[9][10][11]

History


Elon Musk first discussed the Autopilot system publicly in 2013, noting “Autopilot is a good thing to have in planes, and we should have it in cars.”[12]

All Tesla cars manufactured between September 2014 and October 2016 had the initial hardware (HW1) that supported Autopilot.[13] On October 14, 2014, Tesla offered customers the ability to pre-purchase Autopilot capability within a “Tech Package” option. At that time Tesla stated Autopilot would include semi-autonomous drive and parking capabilities,[14][15][16] and was not designed for self-driving.[17]

Tesla Inc. developed initial versions of Autopilot in partnership with the Israeli company Mobileye.[18] Tesla and Mobileye ended their partnership in July 2016.[19][20]

Software enabling Autopilot was released in mid-October 2015 as part of Tesla software version 7.0.[21] At that time, Tesla announced its goal to offer self-driving technology.[22] Software version 7.1 then removed some features – to discourage customers from engaging in risky behavior – and added the Summon remote-parking capability that can move the car forward and backward under remote human control without a driver in the car.[23][24][25]

In March 2015, speaking at an Nvidia conference, Musk stated:

- “I don’t think we have to worry about autonomous cars because it’s a sort of a narrow form of AI. It’s not something I think is very difficult. To do autonomous driving that is to a degree much safer than a person, is much easier than people think.”[26] “… I almost view it like a solved problem.”[27]

In December 2015 Musk predicted „complete autonomy“ by 2018.[28]

On August 16, 2016, Elon Musk announced Autopilot 8.0, which processes radar signals to create a coarse point cloud similar to lidar to help navigate in low visibility, and even to “see” in front of the car ahead of the Tesla.[29][30] In November 2016, Autopilot 8.0 was updated to give the driver a more noticeable signal that it is engaged and to require drivers to touch the steering wheel more frequently.[31][32] By November 2016, Autopilot had operated actively on HW1 vehicles for 300 million miles (500 million km) and in “shadow” (inactive) mode for 1.3 billion miles (2 billion km).[33]

Tesla states that as of October 2016, all new vehicles come with the necessary sensors and computing hardware, known as hardware version 2 (HW2), for future full self-driving.[34] Tesla used the term “Enhanced Autopilot” (EA) to refer to HW2 capabilities that were not available in hardware version 1 (HW1), which include the ability to automatically change lanes without requiring driver input, to transition from one freeway to another, and to exit the freeway when the destination is near.[35]

Autopilot software for HW2 cars came in February 2017. It included traffic-aware cruise control, autosteer on divided highways, and autosteer on ‘local roads’ up to a speed of 35 mph or a specified number of mph over the local speed limit, to a maximum of 45 mph.[36] Software version 8.1 for HW2 arrived in June 2017, adding a new driving-assist algorithm, full-speed braking, and parallel and perpendicular parking.[37] Later releases offered smoother lane-keeping and less jerky acceleration and deceleration.

In August 2017, Tesla announced that HW2.5 included a secondary processor node to provide more computing power and additional wiring redundancy to slightly improve reliability; it also enabled dashcam and sentry mode capabilities.[38][39]

In March or April 2019, Tesla began fitting a new version of the “full self-driving computer”, also known as hardware 3 (HW3), which has two Tesla-designed microprocessors.[40][41][42]

In April 2019, Tesla started releasing an update to Navigate on Autopilot, which does not require lane change confirmation, but does require the driver to have hands on the steering wheel.[43] The car will navigate freeway interchanges on its own, but the driver needs to supervise. The ability is available to those who have purchased Enhanced Autopilot or Full Self Driving Capability.

In May 2019, Tesla provided an updated Autopilot in Europe to comply with the new UN/ECE R79 regulation on the automatically commanded steering function.[44][45]

In September 2019, Tesla released software version 10 to early access users,[46][47] featuring improvements in driving visualization and automatic lane changes.

In February 2019, Musk stated that Tesla’s Full Self Driving capability would be “feature complete” by the end of 2019.[48][49]

I think we will be feature complete, full self-driving, this year. Meaning the car will be able to find you in a parking lot, pick you up and take you all the way to your destination without an intervention. This year. I would say I am of certain of that, that is not a question mark. However, people sometimes will extrapolate that to mean now it works with 100% certainty, requiring no observation, perfectly, this is not the case.

— Elon Musk[50]

As of December 19, 2019, Tesla’s order page for “Full Self-Driving Capability” stated:

Coming later this year:[51][52]

- Recognize and respond to traffic lights and stop signs

- Automatic driving on city streets.

In January 2020, Musk pushed back his projection for feature completeness to the end of 2020, and added that feature complete “doesn’t mean that features are working well.”[53]

In April 2020, Tesla released a “beta” feature to recognize and respond to stop signs and traffic lights.[54]

In July 2020, industry experts said that Musk and Tesla’s autonomous driving plans lack credibility, and their goal of achieving level 5 autonomy by the end of 2020 isn’t feasible.[55]

In August 2020, Musk stated that 200 software engineers, 100 hardware engineers and 500 “labelers” were working on Autopilot.[56]

In September 2020, Tesla reintroduced the term “Enhanced Autopilot” for a subset of features that provides full Autopilot on highways, parking, and summon.[57][58] Full Self Driving additionally provides automation on city roads with traffic lights.[59]

In October 2020, Tesla introduced automatic driving on city streets to Early Access Program (EAP) testers.[60][61]

Driving features

Tesla’s Autopilot can be classified as level 2 under the SAE International six levels (0 to 5) of vehicle automation.[62] At this level, the car can steer, accelerate and brake on its own, but requires the driver to monitor the driving at all times and be prepared to take control at a moment’s notice.[63][64] Tesla’s owner’s manual states that Autopilot should not be used on city streets or on roads where traffic conditions are constantly changing;[65][66][67] however, some current full self-driving capabilities (“traffic and stop sign control (beta)”) and future full self-driving capabilities (“autosteer on city streets”) are advertised for city streets.[68]

| Hardware | Year | Function and description | Requirements |
|---|---|---|---|
| HW1,2,3 | 2014 | Over-the-air software updates. Autopilot updates received as part of recurring Tesla software updates. | |
| HW1,2,3 | 2014 | Safety features. If Autopilot detects a potential front or side collision with another vehicle, bicycle or pedestrian within a distance of 525 feet (160 m), it sounds a warning.[70] Autopilot also has automatic emergency braking that detects objects that may hit the car and applies the brakes, and the car may also automatically swerve out of the way to prevent a collision. | |
| HW1,2,3 | 2014 | Visualization. Autopilot includes a video display of some of what it sees around it. It displays driving lanes and vehicles in front, behind and on either side of it (in other lanes). It also displays lane markings and speed limits (via its cameras and what it knows from maps). On HW3, it displays stop signs and traffic signals. It distinguishes pedestrians, bicyclists/motorcyclists, small cars, and larger SUVs/trucks. | |
| HW1,2,3 | 2014[17][71][72] | Traffic-aware cruise control.[73] Also known as adaptive cruise control, the ability to maintain a safe distance from the vehicle in front of it by accelerating and braking as that vehicle speeds up and slows down. It also slows on tight curves, on interstate ramps, and when another car enters or exits the road in front of the car. It can be enabled at any speed between 0 mph and 90 mph. By default, it sets the limit at the current speed limit plus/minus a driver-specified offset, then adjusts its target speed according to changes in speed limits. If road conditions warrant, Autosteer and cruise control disengage and an audio and visual signal indicate that the driver must assume full control. | |
| HW1,2,3 | 2014[17][71][72] | Autosteer. Steers the car to remain in whatever lane it is in (also known as lane-keeping). It is able to safely change lanes when the driver taps the turn signal stalk.[74] On divided highways, HW2 and HW3 cars limit use of the feature to 90 mph (145 km/h), and on non-divided highways the limit is five miles over the speed limit or 45 mph (72 km/h) if no speed limit is detected.[75] If the driver ignores three audio warnings about controlling the steering wheel within an hour, Autopilot is disabled until a new journey is begun.[76] | |
| HW1,2,3 | 2014[77][78][79] | Lane departure warning | |
| HW1,2,3 | 2014 | Autopilot maximum speed. In 2017, changed to 90 mph (145 km/h).[80][81] | |
| HW1,2,3 | 2014 | Speed Assist. Front-facing cameras detect speed limit signs and display the current limit on the dashboard or center display. Limits are compared against GPS data if no signs are present or if the vehicle is HW2 or HW2.5.[70] | |
| HW1,2,3 | 2014[17][71][72] | Basic summon. Moves car into and out of a tight space using the Tesla phone app or key fob without the driver in the car.[82][83] | EA or FSD |
| HW1,2,3 | 2015[84][85][86][71][72] | Autopark. Parks the car in perpendicular or parallel parking spaces, with either nose or tail facing outward; driver does not need to be in the car.[87][88] | EA or FSD |
| HW1,2,3 | 2014[89] | Forward Collision Warning | |
| HW2,3 | 2016[90][71][91][72] | Navigate on Autopilot (Beta). Navigates on-ramp to off-ramp: executes automatic lane changes, moves to a more appropriate lane based on speed, navigates freeway interchanges, and exits a freeway.[92][93] | EA or FSD |
| HW2,3 | 2018[94] | Obstacle-Aware Acceleration | |
| HW2,3 | 2019[95] | Blind Spot Collision Warning Chime | |
| HW1,2,3 | 2019[96] | Lane Departure Avoidance | |
| HW2,3 | 2019[96] | Emergency Lane Departure Avoidance | |
| HW2,3 | 2019[97] | Smart Summon. Enables supervised 150-foot line-of-sight remote car retrieval on private property (for example, parking lots) using the Tesla phone app; the car will navigate around obstacles.[98][99][100] | EA or FSD |
| HW2,3 | 2019[43] | Automatic lane change. Driver initiates the lane changing signal when safe, then the system does the rest.[101] Autosteer is disabled upon a manual lane change. | EA or FSD |
| HW3 | 2019[72][71][17] | Full Self-Driving hardware. Tesla has made claims since 2016[102][103][104] that all new HW2 vehicles have the hardware necessary for full self driving; however, free[105] hardware upgrades have been required.[106] (An upgrade from HW 2 or 2.5 to HW3 is free to those who have purchased FSD.[107][108]) Tesla is claiming that the current software will be upgraded to provide full self driving (at an unknown future date, without any need for additional hardware).[109][110] | |
| HW3 | 2019[111] | Traffic light/stop sign recognition | FSD |
| HW3 | 2020[72][112] | Traffic Light and Stop Sign Control (Beta).[72][112] When using Traffic-Aware Cruise Control or Autosteer, this feature will stop for stop signs and all non-green traffic lights.[113] No confirmation may be needed for driving straight through green lights.[114] Chime on green traffic light.[115] | FSD |
| HW3 | 2020[116][114][117] | Autosteer on city streets (Beta; currently only for Early Access Program testers) | FSD |
| HW3 | 2020[116][114][117] | Full Self-Driving (Beta; currently only for Early Access Program testers) | FSD |
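The speed and warning rules in the Traffic-Aware Cruise Control and Autosteer rows can be modeled as a few small functions. The sketch below is a hypothetical illustration, not Tesla’s implementation: every function, class and constant name is invented, speeds are assumed to be in mph, and the rules are read literally from the table (cruise target = detected speed limit plus the driver’s offset, capped at 90 mph; Autosteer capped at 90 mph on divided highways, otherwise at the speed limit plus 5 mph, or 45 mph if no limit is detected; three ignored steering-wheel warnings within an hour disable Autopilot until a new journey).

```python
from collections import deque
from typing import Optional

# Hypothetical constants read off the feature table (all speeds in mph).
TACC_MAX_MPH = 90
AUTOSTEER_DIVIDED_MAX_MPH = 90
AUTOSTEER_UNDIVIDED_MARGIN_MPH = 5
AUTOSTEER_NO_LIMIT_MAX_MPH = 45


def tacc_target_speed(speed_limit_mph: float, offset_mph: float) -> float:
    """Cruise target: detected speed limit plus the driver's offset, clamped to 0..90 mph."""
    return max(0, min(speed_limit_mph + offset_mph, TACC_MAX_MPH))


def autosteer_max_speed(divided_highway: bool, speed_limit_mph: Optional[float]) -> float:
    """Autosteer speed cap as described in the table (illustrative only)."""
    if divided_highway:
        return AUTOSTEER_DIVIDED_MAX_MPH
    if speed_limit_mph is None:  # no speed limit detected
        return AUTOSTEER_NO_LIMIT_MAX_MPH
    return speed_limit_mph + AUTOSTEER_UNDIVIDED_MARGIN_MPH


class HandsOnWarningTracker:
    """Hypothetical model of the 'three ignored warnings within an hour' lockout."""

    def __init__(self, max_ignored: int = 3, window_s: float = 3600.0) -> None:
        self.max_ignored = max_ignored
        self.window_s = window_s
        self._ignored = deque()  # timestamps (seconds) of ignored warnings
        self.locked_out = False

    def record_ignored_warning(self, t_s: float) -> bool:
        """Record an ignored warning at time t_s; return True if Autopilot is now locked out."""
        self._ignored.append(t_s)
        # Forget warnings that fall outside the sliding one-hour window.
        while t_s - self._ignored[0] > self.window_s:
            self._ignored.popleft()
        if len(self._ignored) >= self.max_ignored:
            self.locked_out = True  # stays locked until a new journey begins
        return self.locked_out

    def start_new_journey(self) -> None:
        """A new journey clears the warning history and the lockout."""
        self._ignored.clear()
        self.locked_out = False


if __name__ == "__main__":
    print(tacc_target_speed(65, 5))          # 70
    print(autosteer_max_speed(False, None))  # 45
    tracker = HandsOnWarningTracker()
    for t in (0, 600, 1200):                 # three ignored warnings within 20 minutes
        locked = tracker.record_ignored_warning(t)
    print(locked)                            # True
```

A sliding window is only one reading of “within an hour”; the table does not say whether the count resets on a fixed hourly boundary instead.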

Hardware

Summary

| Name | Autopilot hardware 1 | Enhanced Autopilot hardware 2.0[a] | Enhanced Autopilot hardware 2.5 (HW2.5)[b] | Full self-driving computer (FSD) hardware 3[c] |
|---|---|---|---|---|
| Hardware | Hardware 1 | Hardware 2[71] | Hardware 2[71] | Hardware 3 |
| Initial availability date | 2014 | October 2016 | August 2017 | April 2019 |
| Computers | | | | |
| Platform | Mobileye EyeQ3[120] | NVIDIA DRIVE PX 2 AI computing platform[121] | NVIDIA DRIVE PX 2 with secondary node enabled[39] | Two identical Tesla-designed processors |
| Sensors | | | | |
| Forward radar | 160 m (525 ft)[69] | 170 m (558 ft)[69] | 170 m (558 ft)[69] | 170 m (558 ft)[69] |
| Front/side camera color filter array | N/A | RCCC[69] | RCCB[69] | RCCB[69] |
| Forward cameras | 1 monochrome with unknown range | 3 | 3 | 3 |
| Forward-looking side cameras | N/A | | | |
| Rearward-looking side cameras | N/A | | | |
| Sonars | 12 surrounding with 5 m (16 ft) range | 12 surrounding with 8 m (26 ft) range | 12 surrounding with 8 m (26 ft) range | 12 surrounding with 8 m (26 ft) range |

Notes

- [a] All cars sold after October 2016 are equipped with Hardware 2.0, which includes eight cameras (covering a complete 360° around the car), one forward-facing radar, and twelve sonars (also covering a complete 360°). Buyers may choose an extra-cost option to purchase either “Enhanced Autopilot” or “Full Self-Driving” to enable features. Front and side collision mitigation features are standard on all cars.[118]

- [b] Also known as “Hardware 2.1”; includes added computing and wiring redundancy for improved reliability.[39]

- [c] Tesla described the previous computer as a supercomputer capable of full self driving.[119]

Hardware 1

Vehicles manufactured after late September 2014 are equipped with a camera mounted at the top of the windshield, forward looking radar[122][123] in the lower grille and ultrasonic acoustic location sensors in the front and rear bumpers that provide a 360-degree view around the car. The computer is the Mobileye EyeQ3.[124] This equipment allows the Tesla Model S to detect road signs, lane markings, obstacles, and other vehicles.

Auto lane change can be initiated by the driver turning on the lane-change signal when it is safe (a requirement due to the ultrasonic sensors’ limited 16-foot range), and the system then completes the lane change.[101] In 2016, HW1 did not detect pedestrians or cyclists,[125] and while Autopilot detects motorcycles,[126] there have been two instances of HW1 cars rear-ending motorcycles.[127]

Upgrading from Hardware 1 to Hardware 2 is not offered as it would require substantial work and cost.[128]

Hardware 2

HW2, included in all vehicles manufactured after October 2016, includes an Nvidia Drive PX 2[129] GPU for CUDA-based GPGPU computation.[130][131] Tesla claimed that HW2 provided the necessary equipment to allow full self-driving capability at SAE Level 5. The hardware includes eight surround cameras and 12 ultrasonic sensors, in addition to forward-facing radar with enhanced processing capabilities.[132] The Autopilot computer is replaceable to allow for future upgrades.[133] The radar is able to observe beneath and ahead of the vehicle in front of the Tesla;[134] the radar can see vehicles through heavy rain, fog or dust.[135] Tesla claimed that the hardware was capable of processing 200 frames per second.[136]

When “Enhanced Autopilot” was enabled in February 2017 by the v8.0 (17.5.36) software update, testing showed the system was limited to using one of the eight onboard cameras: the main forward-facing camera.[137] The v8.1 software update released a month later enabled a second camera, the narrow-angle forward-facing camera.[138]

Hardware 2.5

In August 2017, Tesla announced that HW2.5 included a secondary processor node to provide more computing power and additional wiring redundancy to slightly improve reliability; it also enabled dashcam and sentry mode capabilities.[38][39]

Hardware 3

According to Tesla’s director of Artificial Intelligence Andrej Karpathy, as of Q3 2018 Tesla had trained large neural networks that worked very well but could not be deployed to Tesla vehicles built up to that time because those vehicles lacked sufficient computational resources. HW3 provides the necessary resources to run these neural networks.[139]

HW3 includes a custom Tesla-designed system on a chip. Tesla claimed that the new system processes 2,300 frames per second (fps), which is a 21x improvement over the 110 fps image processing capability of HW2.5.[140][141] The firm described it as a “neural network accelerator”.[136] Each chip is capable of 36 trillion operations per second, and there are two chips for redundancy.[142] The company claimed that HW3 was necessary for “full self-driving”, but not for “enhanced Autopilot” functions.[143]
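The “21x” figure follows directly from the two frame rates Tesla quoted; this checks only the arithmetic of Tesla’s own claims, not the frame rates themselves:

```latex
\frac{2300\ \text{fps}}{110\ \text{fps}} \approx 20.9 \approx 21\times
```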

The first availability of HW3 was April 2019.[144] Customers with HW2 or HW2.5 who purchased the Full Self-Driving (FSD) package are eligible for an upgrade to HW3 without cost.[145]

Tesla claims HW3 has 2.5× the performance of HW2.5 at 1.25× the power and 20% lower cost. HW3 features twelve ARM Cortex-A72 CPUs operating at 2.6 GHz, two Neural Network Accelerators operating at 2 GHz and a Mali GPU operating at 1 GHz.[146]

Future development

According to Elon Musk, full autonomy is “really a software limitation: The hardware exists to create full autonomy, so it’s really about developing advanced, narrow AI for the car to operate on.”[147][148] The Autopilot development focus is on “increasingly sophisticated neural nets that can operate in reasonably sized computers in the car”.[147][148] According to Musk, “the car will learn over time”, including from other cars.[149]

Full self-driving

Approach

Tesla’s approach to achieving full self-driving (FSD) is to mimic how a human learns to drive,[citation needed] that is, by training a neural network using the behavior of hundreds of thousands of Tesla drivers,[150] and (from a sensor perspective) by relying chiefly on visible-light cameras supplemented by radar and information from components used for other purposes in the car (the coarse-grained two-dimensional maps used for navigation, the ultrasonic sensors used for parking, etc.).[151][152] Tesla has made a deliberate decision not to use lidar, which Elon Musk has called “stupid, expensive and unnecessary.”[153] This makes Tesla’s approach markedly different from that of other companies like Waymo and Cruise, which are training their neural networks using the behavior of highly trained drivers[154][155] and are additionally relying on highly detailed (centimeter-scale) three-dimensional maps and lidar in their autonomous vehicles.[152][156][157][158][159] Tesla claims that although its approach is more difficult, it will ultimately be more useful, because its vehicles will be able to self-drive without geofencing concerns.[160][161] Musk has argued that a system that relies on centimeter-scale three-dimensional maps is “extremely brittle” because it is not able to adapt when the physical environment changes.[162]

Tesla’s software has been trained based on 3 billion miles driven by Tesla vehicles on public roads, as of April 2020.[163][164] Alongside tens of millions of miles on public roads,[165] competitors have trained their software on tens of billions of miles in computer simulations, as of January 2020.[166] Musk has argued that simulated miles are not able to capture the “weirdness” of the real world, and therefore will not be sufficient to train the software.[162]

In terms of computing hardware, Tesla designed a self-driving computer chip that has been installed in its cars since March 2019[167] and also developed a neural network training supercomputer;[168][169] other vehicle automation companies such as Waymo regularly use custom chipsets and neural networks as well.[170][171]

Criticism

Critics say that Tesla’s self-driving strategy is dangerous and was abandoned by other companies years ago.[172][173][174] Most experts believe that Tesla’s approach of trying to achieve full self-driving by eschewing high-definition maps and lidar is not feasible.[175][176] Auto analyst Brad Templeton has criticized Tesla’s approach by arguing, “The no-map approach involves forgetting what was learned before and doing it all again.”[177] In a March 2020 study by Navigant Research, Tesla was ranked last for both strategy and execution in the autonomous driving sector.[178] Some news reports in 2019 state “practically everyone views [lidar] as an essential ingredient for self-driving cars”[5] and “experts and proponents say it adds depth and vision where camera and radar alone fall short.”[179] However, in 2019 researchers at Cornell University “[u]sing two inexpensive cameras on either side of a vehicle’s windshield … have discovered they can detect objects with nearly lidar’s accuracy and at a fraction of the cost,”[180][181] and Tesla also claims it has achieved close to lidar accuracy using just cameras.[182]

FSD Beta and deployment schedule

At the end of 2016, Tesla expected to demonstrate full autonomy by the end of 2017,[183][184] and in April 2017, Musk predicted that in around two years, drivers would be able to sleep in their vehicle while it drives itself.[185] In 2018 Tesla revised the date to demonstrate full autonomy to be by the end of 2019.[186] In January 2020, Musk claimed the full self-driving software would be “feature complete” by the end of 2020, adding that feature complete “doesn’t mean that features are working well.”[187] Musk later stated that Tesla would provide SAE Level 5 autonomy by the end of 2021[188][189] and that Tesla plans to release a monthly subscription package for FSD in 2021.[190]

On October 20, 2020, Tesla released a “beta” version of its full self-driving software to Early Access Program testers, a small group of users in the United States.[117][7][8] Musk stated that the testing “[w]ill be extremely slow [and] cautious” and “be limited to a small number of people who are expert & careful drivers”.[7] As of January 2021, the number of testers was reportedly “nearly 1,000”.[191] The release of the beta program has renewed concern regarding whether the technology is ready for testing on public roads.[192][193] In March 2021, Elon Musk announced via Twitter that the FSD beta program would double in participant size with the next release.[citation needed]

Comparisons

In 2018, Consumer Reports rated Tesla Autopilot as second best out of four (Cadillac, Tesla, Nissan, Volvo) “partially automated driving systems”.[194] Autopilot scored highly for its capabilities and ease of use, but was worse at keeping the driver engaged than the other manufacturers’ systems.[194] Consumer Reports also found multiple problems with Autopilot’s automatic lane change function, such as cutting too closely in front of other cars and passing on the right.[195]

In 2018, the Insurance Institute for Highway Safety compared Tesla, BMW, Mercedes and Volvo “advanced driver assistance systems” and stated that the Tesla Model 3 experienced the fewest incidents of crossing over a lane line, touching a lane line, or disengaging.[196]

In February 2020, Car and Driver compared Cadillac Super Cruise, Comma.ai and Autopilot.[197] They called Autopilot “one of the best”, highlighting its user interface and versatility, but criticizing it for swerving abruptly.

In June 2020, Digital Trends compared Cadillac Super Cruise and Tesla Autopilot.[198] The conclusion: “Super Cruise is more advanced, while Autopilot is more comprehensive.”

In October 2020, the European New Car Assessment Programme (Euro NCAP) gave the Tesla Model 3 Autopilot a score of “moderate”.[199]

Also in October 2020, Consumer Reports evaluated 17 driver assistance systems, and concluded that Tesla Autopilot was “a distant second” behind Cadillac’s Super Cruise.[3][200]

In February 2021, a MotorTrend review compared Super Cruise and Autopilot and said Super Cruise was better.[201]

Safety concerns

The National Transportation Safety Board criticized Tesla Inc.’s lack of system safeguards in a fatal 2018 Autopilot crash in California.[202] The NTSB has criticized Tesla for failing to foresee and prevent “predictable abuse” of Autopilot.[203][204] The Center for Auto Safety and Consumer Watchdog have called for federal and state investigations into Autopilot and Tesla’s marketing of the technology, which they believe is “dangerously misleading and deceptive”, giving consumers the false impression that their vehicles are self-driving or autonomous.[205][206] UK safety experts have called Tesla’s Autopilot “especially misleading.”[207] A 2019 IIHS study showed that the name “Autopilot” leads more drivers to misperceive behaviors such as texting or taking a nap as safe, compared with similar level 2 driver-assistance systems from other car companies.[208] Tesla’s Autopilot and full self-driving features have also been criticized in a May 2020 report on “autonowashing: the greenwashing of vehicle automation”.[209]

Driver monitoring

Drivers have been found sleeping at the wheel and driving under the influence of alcohol with Autopilot engaged.[210][211] Tesla has not implemented more robust driver monitoring features that could prevent distracted and inattentive driving.[212]

Detecting stationary vehicles at speed

Autopilot may not detect stationary vehicles; the manual states: “Traffic-Aware Cruise Control cannot detect all objects and may not brake/decelerate for stationary vehicles, especially in situations when you are driving over 50 mph (80 km/h) and a vehicle you are following moves out of your driving path and a stationary vehicle or object is in front of you instead.”[213] This has led to numerous crashes with stopped emergency vehicles.[214][215][216][217] This is the same problem that any car equipped with just adaptive cruise control or automated emergency braking has (for example, Volvo Pilot Assist).[213]

Dangerous and unexpected behavior

In a 2019 Bloomberg survey, hundreds of Tesla owners reported dangerous behaviors with Autopilot, such as phantom braking, veering out of lane, or failing to stop for road hazards.[218] Autopilot users have also reported the software crashing and turning off suddenly, collisions with off ramp barriers, radar failures, unexpected swerving, tailgating, and uneven speed changes.[219]

Ars Technica notes that the brake system tends to initiate later than some drivers expect.[220] One driver claimed that Tesla’s Autopilot failed to brake, resulting in collisions, but Tesla pointed out that the driver deactivated the cruise control of the car prior to the crash.[221] Ars Technica also notes that while lane changes may be semi-automatic (if Autopilot is on, and the vehicle detects slow moving cars or if it is required to stay on route, the car may automatically change lanes without any driver input), the driver must show the car that he or she is paying attention by touching the steering wheel before the car makes the change.[222] In 2019, Consumer Reports found that Tesla’s automatic lane-change feature is “far less competent than a human driver”.[223]

Regulation

A spokesman for the U.S. National Highway Traffic Safety Administration (NHTSA) said that “any autonomous vehicle would need to meet applicable federal motor vehicle safety standards” and the NHTSA “will have the appropriate policies and regulations in place to ensure the safety of this type of vehicles.”[224]

Court cases

Tesla’s Autopilot was the subject of a class action suit brought in 2017 that claimed the second-generation Enhanced Autopilot system was “dangerously defective.”[225] The suit was settled in 2018; owners who had paid US$5,000 (equivalent to $5,327 in 2019) in 2016 and 2017 to equip their cars with the updated Autopilot software were compensated between US$20 and US$280 for the delay in implementing Autopilot 2.0.[226]

In July 2020, a German court ruled that Tesla made exaggerated promises about its Autopilot technology, and that the “Autopilot” name created the false impression that the car can drive itself.[227]

Safety statistics

Million miles driven between accidents with different levels of Autopilot and active safety features

In 2016, data after 47 million miles of driving in Autopilot mode showed the probability of an accident was at least 50% lower when using Autopilot.[228] During the investigation into the fatal crash of May 2016 in Williston, Florida, NHTSA released a preliminary report in January 2017 stating “the Tesla vehicles’ crash rate dropped by almost 40 percent after Autosteer installation.”[229][230]:10 Disputing this, in 2019, a private company, Quality Control Systems, released their report analyzing the same data, stating the NHTSA conclusion was “not well-founded”.[231] Quality Control Systems’ analysis of the data showed the crash rate (measured in the rate of airbag deployments per million miles of travel) actually increased from 0.76 to 1.21 after the installation of Autosteer.[232]:9
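The size of the disputed change can be expressed as a relative change in the airbag-deployment rate. The short sketch below applies the standard formula (after − before) / before to the Quality Control Systems figures quoted above; the helper name `relative_change` is illustrative, and a negative result would correspond to a drop like the one NHTSA reported.

```python
def relative_change(before: float, after: float) -> float:
    """Fractional change from `before` to `after`; positive means an increase."""
    return (after - before) / before


# Airbag deployments per million miles, before and after Autosteer (QCS figures).
qcs_change = relative_change(0.76, 1.21)
print(f"{qcs_change:+.1%}")  # +59.2%
```

On Quality Control Systems’ reading, the rate therefore rose by roughly 59%, whereas NHTSA’s report described a drop of almost 40%.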

In February 2020, Andrej Karpathy, Tesla’s head of AI and computer vision, stated that Tesla cars had driven 3 billion miles on Autopilot, of which 1 billion had been driven using Navigate on Autopilot; that Tesla cars had performed 200,000 automated lane changes; and that 1.2 million Smart Summon sessions had been initiated with Tesla cars.[233] He also stated that Tesla cars were avoiding pedestrian accidents at a rate of tens to hundreds per day.[234]

Tesla’s self-reported quarterly summary statistics for vehicle safety are:[2]

| Condition (one accident every X million miles driven) | 2018 Q3 | 2018 Q4 | 2019 Q1 | 2019 Q2 | 2019 Q3 | 2019 Q4 | 2020 Q1 | 2020 Q2 | 2020 Q3 | 2020 Q4 |
|---|---|---|---|---|---|---|---|---|---|---|
| Autopilot engaged | 3.34 | 2.91 | 2.87 | 3.27 | 4.34 | 3.07 | 4.68 | 4.53 | 4.59 | 3.45 |
| Autopilot not engaged, active safety features engaged | 1.92 | 1.58 | 1.76 | 2.19 | 2.70 | 2.10 | 1.99 | 2.27 | 2.42 | 2.05 |
| Neither Autopilot nor active safety features engaged | 2.02 | 1.25 | 1.26 | 1.41 | 1.82 | 1.64 | 1.42 | 1.56 | 1.79 | 1.27 |
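
Because the table reports miles per accident, larger numbers mean fewer accidents. A minimal sketch that inverts Tesla’s self-reported figures into accidents per million miles and computes raw ratios between rows; the variable and function names are illustrative, and the ratios do not adjust for differences in where or when each mode is driven, which the table does not capture:

```python
# Tesla's self-reported figures from the table:
# millions of miles driven per accident, 2018 Q3 through 2020 Q4.
QUARTERS = ["2018 Q3", "2018 Q4", "2019 Q1", "2019 Q2", "2019 Q3",
            "2019 Q4", "2020 Q1", "2020 Q2", "2020 Q3", "2020 Q4"]
AUTOPILOT = [3.34, 2.91, 2.87, 3.27, 4.34, 3.07, 4.68, 4.53, 4.59, 3.45]
SAFETY_ONLY = [1.92, 1.58, 1.76, 2.19, 2.70, 2.10, 1.99, 2.27, 2.42, 2.05]
NEITHER = [2.02, 1.25, 1.26, 1.41, 1.82, 1.64, 1.42, 1.56, 1.79, 1.27]


def accidents_per_million_miles(miles_per_accident: float) -> float:
    """Invert 'one accident every X million miles' into a rate."""
    return 1.0 / miles_per_accident


def raw_ratio(a: float, b: float) -> float:
    """How many times more miles per accident row `a` reports than row `b`."""
    return a / b


if __name__ == "__main__":
    for q, ap, safety, none in zip(QUARTERS, AUTOPILOT, SAFETY_ONLY, NEITHER):
        print(f"{q}: {accidents_per_million_miles(ap):.3f} (Autopilot) vs "
              f"{accidents_per_million_miles(none):.3f} (neither) accidents "
              f"per million miles; raw ratios {raw_ratio(ap, none):.2f}x "
              f"vs neither, {raw_ratio(ap, safety):.2f}x vs safety-only")
```

In every quarter listed, the Autopilot row reports more miles per accident than the "neither" row; the sketch only restates that gap as rates and ratios, not as a causal effect.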

Fatal crashes

As of January 2021, there have been six recorded fatalities involving Tesla’s Autopilot, though several other incidents where Autopilot use was suspected remain outstanding.[235]

Handan, China (January 20, 2016)

On January 20, 2016, the driver of a Tesla Model S in Handan, China, was killed when his car crashed into a stationary truck.[236] The Tesla was following a car in the far left lane of a multi-lane highway; the car in front moved to the right lane to avoid a truck stopped on the left shoulder, and the Tesla, which the driver’s father believes was in Autopilot mode, did not slow before colliding with the stopped truck.[237] According to footage captured by a dashboard camera, the stationary street sweeper on the left side of the expressway partially extended into the far left lane, and the driver did not appear to respond to the unexpected obstacle.[238]

In September 2016, the media reported that the driver’s family had filed a lawsuit in July against the Tesla dealer who sold the car.[239] The family’s lawyer stated the suit was intended „to let the public know that self-driving technology has some defects. We are hoping Tesla when marketing its products, will be more cautious. Do not just use self-driving as a selling point for young people.“[237] Tesla released a statement saying they „have no way of knowing whether or not Autopilot was engaged at the time of the crash“ because the car’s telemetry could not be retrieved remotely owing to damage caused by the crash.[237] In 2018, the lawsuit stalled because the telemetry had been recorded locally to an SD card that could not be given to Tesla; Tesla instead provided a decoding key to a third party for independent review. Tesla stated that „while the third-party appraisal is not yet complete, we have no reason to believe that Autopilot on this vehicle ever functioned other than as designed.“[240] Chinese media later reported that the family sent the information from that card to Tesla, which admitted that Autopilot had been engaged two minutes before the crash.[241] Tesla has since removed the term „Autopilot“ from its Chinese website.[242]

Williston, Florida (May 7, 2016)

The first U.S. Autopilot fatality took place in Williston, Florida, on May 7, 2016, when the driver was killed in a crash with an 18-wheel tractor-trailer. By late June 2016, the U.S. National Highway Traffic Safety Administration (NHTSA) had opened a formal investigation into the fatal crash, working with the Florida Highway Patrol. According to the NHTSA, preliminary reports indicated that the crash occurred when the tractor-trailer made a left turn in front of the 2015 Tesla Model S at an intersection on a non-controlled access highway, and the car failed to apply the brakes. The car continued to travel after passing under the truck’s trailer.[243][244][245] The Tesla’s diagnostic log indicated it was traveling at 74 mi/h (119 km/h) when it collided with and traveled under the trailer, which was not equipped with a side underrun protection system.[246]:12 The underride collision sheared off the Tesla’s glasshouse, destroying everything above the beltline, and caused fatal injuries to the driver.[246]:6–7; 13 In the approximately nine seconds after colliding with the trailer, the Tesla traveled another 886.5 feet (270.2 m) and came to rest after colliding with two chain-link fences and a utility pole.[246]:7; 12

The NHTSA’s preliminary evaluation was opened to examine the design and performance of any automated driving systems in use at the time of the crash, and covered an estimated 25,000 Model S cars.[247] In July 2016, the NHTSA asked Tesla Inc. to provide the agency with detailed information about the design, operation and testing of its Autopilot technology. The agency also requested details of all design changes and updates to Autopilot since its introduction, and of Tesla’s planned updates scheduled for the next four months.[248]

According to Tesla, „neither autopilot nor the driver noticed the white side of the tractor-trailer against a brightly lit sky, so the brake was not applied.“ The car continued at full speed under the trailer, „with the bottom of the trailer impacting the windshield of the Model S.“ Tesla also stated that this was its first known Autopilot-related death in over 130 million miles (209 million km) driven by its customers with Autopilot activated, and that among all types of vehicles in the U.S. there is a fatality every 94 million miles (151 million km).[243][244][249] It has been estimated that billions of miles would need to be driven before Tesla Autopilot could claim to be safer than human drivers with statistical significance. Researchers say that Tesla and others need to release more data on the limitations and performance of automated driving systems if self-driving cars are to become safe and understood enough for mass-market use.[250][251]
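Why one fatality in 130 million miles says little can be made concrete with a small sketch. It assumes, as a simplification not stated in the sources, that fatalities are independent events occurring at a constant rate per mile (a Poisson process), and it uses the exact (Garwood) Poisson confidence interval, which is not necessarily the method the cited researchers used. The function names are hypothetical:

```python
import math


def poisson_cdf(k: int, lam: float) -> float:
    """P(X <= k) for X ~ Poisson(lam)."""
    term = math.exp(-lam)
    total = term
    for i in range(1, k + 1):
        term *= lam / i
        total += term
    return total


def upper_bound_expected_events(k: int, alpha: float = 0.05) -> float:
    """Exact (Garwood) two-sided upper confidence limit for a Poisson mean
    after observing k events, found by bisection on the CDF."""
    target = alpha / 2
    lo, hi = 0.0, max(10.0, 10.0 * (k + 1))
    for _ in range(200):
        mid = (lo + hi) / 2
        if poisson_cdf(k, mid) > target:
            lo = mid  # CDF still above target: the limit is a larger mean
        else:
            hi = mid
    return (lo + hi) / 2


# Figures quoted in the text: 1 fatality in ~130 million Autopilot miles,
# versus Tesla's cited U.S. average of 1 fatality per 94 million miles.
observed_fatalities = 1
autopilot_miles_m = 130.0
us_average_miles_per_fatality_m = 94.0

upper = upper_bound_expected_events(observed_fatalities)
worst_case_miles_per_fatality_m = autopilot_miles_m / upper
print(f"95% upper limit: {upper:.2f} expected fatalities")
print(f"consistent with as few as one fatality per "
      f"{worst_case_miles_per_fatality_m:.0f} million miles")
print("significantly safer than the cited average:",
      worst_case_miles_per_fatality_m > us_average_miles_per_fatality_m)
```

With a single observed event the upper limit is about 5.57 expected fatalities, so the data remain consistent with roughly one fatality per 23 million miles, several times worse than the cited average; narrowing the interval enough to separate the two rates requires far more miles.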

The truck’s driver told the Associated Press that he could hear a Harry Potter movie playing in the crashed car, and said of the car’s speed that „he went so fast through my trailer I didn’t see him.“ He added: „It was still playing when he died and snapped a telephone pole a quarter-mile down the road.“ The Florida Highway Patrol said it found an aftermarket portable DVD player in the wreckage; it is not possible to watch videos on the Model S touchscreen display.[245][252] A laptop computer was also recovered during the post-crash examination of the wreck, along with an adjustable vehicle laptop mount attached to the front passenger’s seat frame. The NHTSA concluded that the laptop was probably mounted and that the driver may have been distracted at the time of the crash.[246]:17–19; 21

Collision Between a Car Operating With Automated Vehicle Control Systems and a Tractor-Semitrailer Truck Near Williston, Florida | 2017 | Accident Report NTSB/HAR-17/02 PB2017-102600[253]:33

In January 2017, the NHTSA Office of Defects Investigation (ODI) released a preliminary evaluation, finding that the driver in the crash had seven seconds to see the truck and identifying no defects in the Autopilot system; the ODI also found that the Tesla car crash rate dropped by 40 percent after Autosteer installation,[229][230] but later clarified that it had not assessed the effectiveness of this technology, or whether it was engaged, in its crash rate comparison.[254] The NHTSA Special Crash Investigation team published its report in January 2018.[246] According to the report, during the drive leading up to the crash the driver had Autopilot engaged for 37 minutes and 26 seconds, and the system issued 13 „hands not detected“ alerts, to which the driver responded after an average delay of 16 seconds.[246]:24 The report concluded: „Regardless of the operational status of the Tesla’s ADAS technologies, the driver was still responsible for maintaining ultimate control of the vehicle. All evidence and data gathered concluded that the driver neglected to maintain complete control of the Tesla leading up to the crash.“[246]:25

In July 2016, the U.S. National Transportation Safety Board (NTSB) announced it had opened a formal investigation into the fatal crash, which occurred while Autopilot was engaged. The NTSB is an investigative body that has only the power to make policy recommendations. An agency spokesman said, „It’s worth taking a look and seeing what we can learn from that event, so that as that automation is more widely introduced we can do it in the safest way possible.“ The NTSB opens about 25 to 30 highway investigations annually.[255] In September 2017, the NTSB released its report, determining that „the probable cause of the Williston, Florida, crash was the truck driver’s failure to yield the right of way to the car, combined with the car driver’s inattention due to overreliance on vehicle automation, which resulted in the car driver’s lack of reaction to the presence of the truck. Contributing to the car driver’s overreliance on the vehicle automation was its operational design, which permitted his prolonged disengagement from the driving task and his use of the automation in ways inconsistent with guidance and warnings from the manufacturer.“[256]

Mountain View, California (March 23, 2018)

Post-crash scene on US 101 in Mountain View, March 23, 2018

On March 23, 2018, a second U.S. Autopilot fatality occurred in Mountain View, California.[257] The crash occurred just before 9:30 A.M. on southbound US 101 at the carpool lane exit for southbound Highway 85, at a concrete barrier where the left-hand carpool lane offramp separates from 101. After the Model X crashed into the narrow concrete barrier, it was struck by two following vehicles and then caught fire.[258]

Both the NHTSA and NTSB investigated the March 2018 crash.[259] In April 2018, another Model S driver demonstrated that Autopilot appeared to be confused by the road surface markings at the crash site. The gore ahead of the barrier is marked by diverging solid white lines (a vee shape), and the Autosteer feature of the Model S appeared to mistakenly use the left-side white line instead of the right-side white line as the lane marking for the far left lane, which would have led the Model S into the same concrete barrier had the driver not taken control.[260] Ars Technica concluded „that as Autopilot gets better, drivers could become increasingly complacent and pay less and less attention to the road.“[261]

In a corporate blog post, Tesla noted that the impact attenuator separating the offramp from US 101 had previously been crushed and not replaced before the Model X crash on March 23.[257][262] The post also stated that Autopilot was engaged at the time of the crash and that the driver’s hands had not been detected on the steering wheel for six seconds before the crash. Vehicle data showed the driver had five seconds and 150 meters (490 ft) of „unobstructed view of the concrete divider, … but the vehicle logs show that no action was taken.“[257] The NTSB investigation had been focused on the damaged impact attenuator and the vehicle fire after the collision, but after it was reported that the driver had complained about the Autopilot functionality,[263] the NTSB announced it would also investigate „all aspects of this crash including the driver’s previous concerns about the autopilot.“[264] An NTSB spokesman stated the organization „is unhappy with the release of investigative information by Tesla“.[265] Elon Musk dismissed the criticism, tweeting that the NTSB was „an advisory body“ and that „Tesla releases critical crash data affecting public safety immediately & always will. To do otherwise would be unsafe.“[266] In response, the NTSB removed Tesla as a party to the investigation on April 11.[267]

The NTSB released a preliminary report on June 7, 2018, which provided the recorded telemetry of the Model X and other factual details. Autopilot was engaged continuously for almost nineteen minutes prior to the crash. In the minute before the crash, the driver’s hands were detected on the steering wheel for 34 seconds in total, but his hands were not detected for the six seconds immediately preceding the crash. Seven seconds before the crash, the Tesla began to steer to the left and was following a lead vehicle; four seconds before the crash, the Tesla was no longer following a lead vehicle; and during the three seconds before the crash, the Tesla’s speed increased to 70.8 mi/h (113.9 km/h). The driver was wearing a seatbelt and was pulled from the vehicle before it was engulfed in flames.[268]

The crash attenuator had previously been damaged on March 12 and had not been replaced at the time of the Tesla crash.[268] The driver involved in the accident on March 12 collided with the crash attenuator at more than 75 mph (121 km/h) and was treated for minor injuries; in comparison, the driver of the Tesla collided with the collapsed attenuator at a slower speed and died from blunt force trauma. After the accident on March 12, the California Highway Patrol failed to report the collapsed attenuator to Caltrans as required. Caltrans was not aware of the damage until March 20, and the attenuator was not replaced until March 26 because a spare was not immediately available.[269]:1–4 This specific attenuator had required repair more often than any other crash attenuator in the Bay Area, and maintenance records indicated that repair of this attenuator had been delayed by up to three months after being damaged.[269]:4–5 As a result, in September 2019 the NTSB released a Safety Recommendation Report asking Caltrans to develop and implement a plan to guarantee timely repair of traffic safety hardware.[270]

At an NTSB board meeting held on February 25, 2020, the board concluded that the crash was caused by a combination of the limitations of the Tesla Autopilot system, the driver’s overreliance on Autopilot, and driver distraction, likely from playing a video game on his phone. The vehicle’s ineffective monitoring of driver engagement was cited as a contributing factor, and the inoperability of the crash attenuator contributed to the driver’s injuries.[271] As an advisory agency, the NTSB does not have regulatory power; however, it made several recommendations to two regulatory agencies. The NTSB recommendations to the NHTSA included: expanding the scope of the New Car Assessment Program to include testing of forward collision avoidance systems; determining if „the ability to operate [Tesla Autopilot-equipped vehicles] outside the intended operational design domain pose[s] an unreasonable risk to safety“; and developing driver monitoring system performance standards. The NTSB submitted recommendations to OSHA relating to distracted driving awareness and regulation. In addition, the NTSB issued recommendations to manufacturers of portable electronic devices (to develop lock-out mechanisms to prevent driver-distracting functions) and to Apple (to ban the nonemergency use of portable electronic devices while driving).[272]

Several NTSB recommendations previously issued to NHTSA, DOT, and Tesla were reclassified to „Open—Unacceptable Response“. These included H-17-41[273] (recommendation to Tesla to incorporate system safeguards that limit the use of automated vehicle control systems to design conditions) and H-17-42[274] (recommendation to Tesla to more effectively sense the driver’s level of engagement).[272]

Kanagawa, Japan (April 29, 2018)

On April 29, 2018, a Tesla Model X operating on Autopilot struck and killed a pedestrian in Kanagawa, Japan, after the driver had fallen asleep.[275] According to a lawsuit filed in federal court in Northern California in April 2020, the Model X accelerated from 24 km/h to approximately 38 km/h before impact, then crashed into a van and motorcycles, killing a 44-year-old man.[276] The lawsuit claims the accident occurred due to flaws in Tesla’s Autopilot system, such as inadequate monitoring to detect inattentive drivers and an inability to handle unforeseen traffic situations.[277]

Delray Beach, Florida (March 1, 2019)

On the morning of March 1, 2019, a Tesla Model 3 driving southbound on US 441/SR 7 in Delray Beach, Florida, struck a semi-trailer truck that was making a left-hand turn out of a private driveway at Pero Family Farms onto northbound SR 7; the Tesla underrode the trailer, and the force of the impact sheared off the greenhouse of the Model 3, killing the Tesla driver.[278] The driver had engaged Autopilot approximately 10 seconds before the collision, and preliminary telemetry showed the vehicle did not detect the driver’s hands on the wheel for the eight seconds immediately preceding the collision.[279] The driver of the semi-trailer truck was not cited.[280] Both the NHTSA and NTSB dispatched investigators to the scene.[281]

In May 2019, the NTSB issued a preliminary report determining that neither the driver of the Tesla nor the Autopilot system executed evasive maneuvers.[282] The circumstances of this crash were similar to those of the fatal underride crash of a Tesla Model S in 2016 near Williston, Florida; in its 2017 report on that earlier crash, the NTSB recommended that Autopilot be used only on limited-access roads (i.e., freeways),[253]:33 which Tesla did not implement.[283]

Arendal, Norway (May 2020)

A truck driver standing on the road next to his semi-trailer (which was partially off the road) was struck and killed by a Tesla.[284] The Tesla’s driver was charged with negligent homicide; early in the trial, an expert witness testified that the car’s computer indicated Autopilot was engaged at the time of the incident.[284] A forensic scientist said the victim was less visible because he was standing in the shadow of the trailer.[285] The driver said he had both hands on the wheel[285] and was vigilant.[284] As of December 2020, the Accident Investigation Board Norway was still investigating.[284]

Non-fatal crashes

Culver City, California (January 22, 2018)

On January 22, 2018, a 2014 Tesla Model S crashed into a fire truck parked on the side of the I-405 freeway in Culver City, California, while reportedly traveling at more than 50 mph (80 km/h); the driver was uninjured.[286] The driver told the Culver City Fire Department that he was using Autopilot. The fire truck and a California Highway Patrol vehicle were parked diagonally across the left emergency lane and high-occupancy vehicle lane of the southbound 405, blocking off the scene of an earlier accident, with emergency lights flashing.[287]

According to a post-accident interview, the driver stated he was drinking coffee, eating a bagel, and maintaining contact with the steering wheel while resting his hand on his knee.[288]:3 During the 30-mile (48 km) trip, which lasted 66 minutes, the Autopilot system was engaged for slightly more than 29 minutes; of those 29 minutes, hands were detected on the steering wheel for only 78 seconds in total. Hands were detected applying torque to the steering wheel for only 51 seconds over the nearly 14 minutes immediately preceding the crash.[288]:9 The Tesla had been following a lead vehicle in the high-occupancy vehicle lane at approximately 21 mph (34 km/h); when the lead vehicle moved to the right to avoid the fire truck, approximately three or four seconds before impact, the Tesla’s traffic-aware cruise control system began to accelerate the Tesla toward its preset speed of 80 mph (130 km/h). When the impact occurred, the Tesla had accelerated to 31 mph (50 km/h).[288]:10 The Autopilot system issued a forward collision warning half a second before the impact but did not engage the automatic emergency braking (AEB) system, and the driver did not manually intervene by braking or steering. Because Autopilot requires agreement between the radar and the visual cameras to initiate AEB, the system was challenged by the specific scenario (a lead vehicle detouring around a stationary object) and by the limited time available after the forward collision warning.[288]:11
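The requirement that radar and camera agree before AEB is initiated describes a conjunctive (AND) fusion rule. The following is a hypothetical illustration of such a rule, not Tesla’s implementation: the `Detection` type and both rule functions are invented for the example, and the frames are made-up inputs rather than crash telemetry. An AND rule withholds braking whenever only one sensor reports an obstacle, trading fewer false activations for more missed ones; an OR rule makes the opposite trade.

```python
from typing import NamedTuple


class Detection(NamedTuple):
    """Hypothetical per-frame report of an obstacle in the vehicle's path."""
    radar: bool
    camera: bool


def aeb_and_rule(d: Detection) -> bool:
    """Brake only if radar AND camera agree (the agreement requirement)."""
    return d.radar and d.camera


def aeb_or_rule(d: Detection) -> bool:
    """Alternative for comparison: brake if EITHER sensor reports an obstacle."""
    return d.radar or d.camera


# Illustrative frames only: an obstacle confirmed by one sensor vs. by both.
camera_only = Detection(radar=False, camera=True)
both = Detection(radar=True, camera=True)

for name, d in [("camera only", camera_only), ("both sensors", both)]:
    print(f"{name}: AND-rule brakes={aeb_and_rule(d)}, "
          f"OR-rule brakes={aeb_or_rule(d)}")
```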

Several news outlets began reporting that Autopilot may not detect stationary vehicles at highway speeds and cannot detect some objects.[289] Raj Rajkumar, who studies autonomous driving systems at Carnegie Mellon University, believes the radars used for Autopilot are designed to detect moving objects but are „not very good in detecting stationary objects“.[290] Both the NTSB and NHTSA dispatched teams to investigate the crash.[291] Hod Lipson, director of Columbia University’s Creative Machines Lab, blamed the diffusion of responsibility: „If you give the same responsibility to two people, they each will feel safe to drop the ball. Nobody has to be 100%, and that’s a dangerous thing.“[292]

In August 2019, the NTSB released its accident brief (HAB-19-07), which concluded that the driver of the Tesla was at fault due to „inattention and overreliance on the vehicle’s advanced driver assistance system“ but added that the design of the Tesla Autopilot system „permitted the driver to disengage from the driving task“.[288]:13–14 After the earlier crash in Williston, the NTSB had issued a safety recommendation to „[d]evelop applications to more effectively sense the driver’s level of engagement and alert the driver when engagement is lacking while automated vehicle control systems are in use.“ Of the manufacturers to which the recommendation was issued, only Tesla had failed to respond.[288]:12–13

South Jordan, Utah (May 11, 2018)

On the evening of May 11, 2018, a 2016 Tesla Model S with Autopilot engaged crashed into the rear of a fire truck that was stopped in the southbound lane at a red light in South Jordan, Utah, at the intersection of SR-154 and SR-151.[293][294] According to witnesses, the Tesla was moving at an estimated 60 mi/h (97 km/h) and did not appear to brake or attempt to avoid the impact.[295][296] The driver of the Tesla, who survived the impact with a broken foot, admitted she was looking at her phone before the crash.[293][297] The NHTSA dispatched investigators to South Jordan.[298] According to telemetry data recovered after the crash, the driver repeatedly took her hands off the wheel, did not touch it during the 80 seconds immediately preceding the crash, and touched the brake pedal only „fractions of a second“ before the crash. The driver was cited by police for „failure to keep proper lookout“.[293][299] The Tesla had slowed to 55 mi/h (89 km/h) to match a vehicle ahead of it and, after that vehicle changed lanes, accelerated to 60 mi/h (97 km/h) in the 3.5 seconds preceding the crash.[300]

Tesla CEO Elon Musk criticized news coverage of the South Jordan crash, tweeting that „a Tesla crash resulting in a broken ankle is front page news and the ~40,000 people who died in US auto accidents alone in [the] past year get almost no coverage“, additionally pointing out that „[a]n impact at that speed usually results in severe injury or death“, but later conceding that Autopilot „certainly needs to be better & we work to improve it every day“.[298] In September 2018, the driver of the Tesla sued the manufacturer, alleging the safety features designed to „ensure the vehicle would stop on its own in the event of an obstacle being present in the path … failed to engage as advertised.“[301] According to the driver, the Tesla failed to provide an audible or visual warning before the crash.[300]

Moscow (August 10, 2019)

On the night of August 10, 2019, a Tesla Model 3 driving in the left-hand lane of the Moscow Ring Road in Moscow, Russia, crashed into a parked tow truck whose corner protruded into the lane, and subsequently burst into flames.[302] According to the driver, the vehicle was traveling at the speed limit of 100 km/h (62 mph) with Autopilot activated; he also said his hands were on the wheel but that he was not paying attention at the time of the crash. All occupants were able to exit the vehicle before it caught fire and were taken to the hospital. Injuries included a broken leg (the driver) and bruises (his children).[303][304]

The force of the collision was enough to push the tow truck forward into the central dividing wall, as recorded by a surveillance camera. Passersby also captured several videos of the fire and explosions after the accident; these videos show that the tow truck had already been moved by then, suggesting the explosions of the Model 3 happened some time after the crash.[305][306]

Taiwan (June 1, 2020)

Traffic cameras captured the moment when a Tesla slammed into an overturned cargo truck in Taiwan on June 1, 2020.[307] The driver was uninjured and told emergency responders that the car was in Autopilot mode.[307] He reportedly told authorities that he saw the truck and braked manually, but too late to avoid the crash; the video appears to show this as a puff of white smoke from the tires.[307]